Experts in artificial intelligence (AI) are gathering in San Francisco to talk about how to keep models safe, but uncertainty from the incoming Trump administration overshadows their work.

Government scientists and AI experts are meeting in the US this week as questions about the industry’s future loom ahead of President-elect Donald Trump’s second term in the White House.

Officials from the US and its allies are hoping to talk about how to better detect and combat a flood of AI-generated deepfakes fueling fraud, harmful impersonation and sexual abuse.

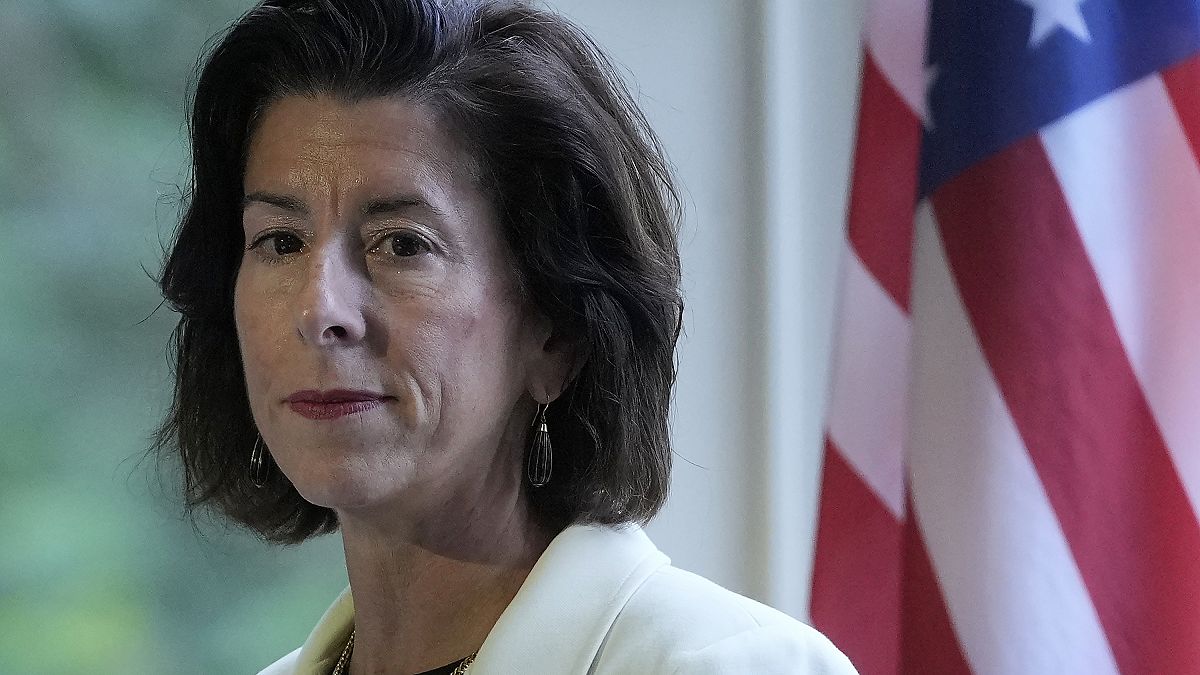

“We have a choice,” said US Commerce Secretary Gina Raimondo to the crowd of attendees on Wednesday. “We are the ones developing this technology. You are the ones developing this technology. We can decide what it looks like”.

The meeting was the first of the International Network of AI Safety Institutes, which was announced during the AI summit in Seoul in May.

The lingering uncertainty comes from Trump’s camp, which promised to “repeal Joe Biden’s dangerous Executive Order that hinders AI Innovation, and imposes Radical Leftwing ideas on the development of this technology”.

Biden signed a sweeping AI executive order last year and this year formed the new AI Safety Institute at the National Institute of Standards and Technology, which is part of the Commerce Department.

But Trump hasn’t made clear what about the order he dislikes or what he’d do about the AI Safety Institute. Trump’s transition team didn’t respond to emails this week seeking comment.

Trump didn’t spend much time talking about AI during his four years as president, though in 2019 he became the first to sign an executive order about AI. It directed federal agencies to prioritise research and development in the field.

‘Safety is good for innovation’

Addressing concerns about slowing down innovation, Raimondo said she wanted to make it clear that the US AI Safety Institute is not a regulator and also “not in the business of stifling innovation”.

“But here’s the thing. Safety is good for innovation. Safety breeds trust. Trust speeds adoption. Adoption leads to more innovation,” she said.

Some experts expect the kind of technical work happening at an old military officers’ club at San Francisco’s Presidio National Park this week to proceed regardless of who’s in charge.

“There’s no reason to believe that we’ll be doing a 180 when it comes to the work of the AI Safety Institute,” said Heather West, a senior fellow at the Center for European Policy Analysis. Behind the rhetoric, she said, there has already been overlap.

Raimondo and other officials sought to press home the idea that AI safety is not a partisan issue.

“And by the way, this room is bigger than politics. Politics is on everybody’s mind. I don’t want to talk about politics. I don’t care what political party you’re in, this is not in Republican interest or Democratic interest,” she said.

“It’s frankly in no one’s interest anywhere in the world, in any political party, for AI to be dangerous, or for AI to get in the hands of malicious non-state actors that want to cause destruction and sow chaos.”